I have been getting woeful page load times on my Contabo VPS (8 cores, 30 GB RAM).

The SEO and PHP app is good and solid; I had it running previously on a more expensive VPS with double the RAM and achieved 2-second page loads. This isn't a post about page optimisation but server optimisation: the page is pretty good, scoring 93 on Lighthouse best practices.

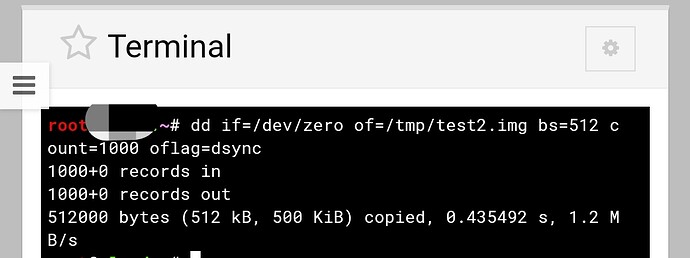

I suspect the disk I/O on the cheap VPS is to blame, but I wanted to squeeze as much performance out of the setup (LAMP stack) as possible. I have added some .htaccess Apache modifications and given MariaDB a little performance tuning, and have seen some better results. Now I am looking at Apache itself.

I had previously used WHM and cPanel with an nginx proxy for static caching, which was brilliant. However, it seems that setting up Webmin with nginx from the outset is preferred over switching from Apache or running both. So the option seems to be to use Apache's mod_cache, but I can't see anyone on the forums here talking about using or configuring it. I can see the modules are on the server, and I could add them to the LoadModule config. I know this is potentially a broad topic, but I am trying to keep the focus on squeezing more out of what's available. So, questions:

- Has anyone had experience configuring mod_cache with Apache on Webmin (AlmaLinux)?

- Does a Webmin LEMP stack generally outperform a Webmin LAMP stack?

- What static caching solutions have others used with Webmin?

I ran fio and got the output below, but I'm not sure what it says, so if you can interpret it, please feel free to give feedback.

TIA

```
fio --name=rand-write --ioengine=libaio --iodepth=32 --rw=randwrite --invalidate=1 --bsrange=4k:4k,4k:4k --size=512m --runtime=120 --time_based --do_verify=1 --direct=1 --group_reporting --numjobs=1
rand-write: (g=0): rw=randwrite, bs=(R) 4096B-4096B, (W) 4096B-4096B, (T) 4096B-4096B, ioengine=libaio, iodepth=32
fio-3.35
Starting 1 process
rand-write: Laying out IO file (1 file / 512MiB)
Jobs: 1 (f=1): [w(1)][100.0%][w=3992KiB/s][w=998 IOPS][eta 00m:00s]
rand-write: (groupid=0, jobs=1): err= 0: pid=1257245: Tue Mar 26 14:28:24 2024
write: IOPS=1075, BW=4301KiB/s (4404kB/s)(504MiB/120034msec); 0 zone resets
slat (usec): min=6, max=110921, avg=67.49, stdev=802.31
clat (usec): min=89, max=144702, avg=29685.63, stdev=12129.36
lat (usec): min=131, max=160943, avg=29753.12, stdev=12144.60
clat percentiles (usec):
| 1.00th=[ 816], 5.00th=[ 1418], 10.00th=[ 10683], 20.00th=[ 27132],
| 30.00th=[ 30540], 40.00th=[ 31327], 50.00th=[ 31851], 60.00th=[ 32113],
| 70.00th=[ 32637], 80.00th=[ 33817], 90.00th=[ 36963], 95.00th=[ 42730],
| 99.00th=[ 66847], 99.50th=[ 79168], 99.90th=[114820], 99.95th=[127402],
| 99.99th=[139461]
bw ( KiB/s): min= 3187, max=45748, per=100.00%, avg=4306.61, stdev=3323.12, samples=239
iops : min= 796, max=11437, avg=1076.55, stdev=830.79, samples=239
lat (usec) : 100=0.01%, 250=0.01%, 500=0.06%, 750=0.51%, 1000=1.94%
lat (msec) : 2=3.92%, 4=1.70%, 10=1.72%, 20=3.02%, 50=84.30%
lat (msec) : 100=2.64%, 250=0.18%
cpu : usr=1.39%, sys=5.29%, ctx=79760, majf=0, minf=9
IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=100.0%, >=64=0.0%
submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.1%, 64=0.0%, >=64=0.0%
issued rwts: total=0,129063,0,0 short=0,0,0,0 dropped=0,0,0,0
latency : target=0, window=0, percentile=100.00%, depth=32

Run status group 0 (all jobs):
WRITE: bw=4301KiB/s (4404kB/s), 4301KiB/s-4301KiB/s (4404kB/s-4404kB/s), io=504MiB (529MB), run=120034-120034msec

Disk stats (read/write):
sda: ios=179/129987, merge=30/7876, ticks=321/3761091, in_queue=3761474, util=98.69%
```
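
As a rough aid to reading the fio numbers above, here is a small Python sketch that checks they are self-consistent. It is not a diagnosis of the disk. It assumes the queue stayed full at the requested depth of 32 for the whole run (the `IO depths` line suggests it did), and the function names are made up for illustration. The figures are copied from the output: with 32 writes in flight and each taking about 30 ms on average, the device can only complete a little over a thousand 4 KiB writes per second, which is what fio reports.

```python
# Figures copied from the fio output above.
IODEPTH = 32                    # --iodepth=32
BLOCK_SIZE_KIB = 4              # 4 KiB blocks (--bsrange=4k:4k)
AVG_LATENCY_USEC = 29753.12     # "lat (usec): ... avg=29753.12"
REPORTED_IOPS = 1075            # "write: IOPS=1075"
REPORTED_BW_KIB_S = 4301        # "BW=4301KiB/s"


def iops_from_latency(depth: int, latency_usec: float) -> float:
    """Estimate IOPS as in-flight requests divided by average latency.

    Hypothetical helper: assumes the queue is kept full at `depth`.
    """
    latency_sec = latency_usec / 1_000_000
    return depth / latency_sec


def bandwidth_kib_s(iops: float, block_kib: int) -> float:
    """Bandwidth is simply operations per second times block size."""
    return iops * block_kib


estimated_iops = iops_from_latency(IODEPTH, AVG_LATENCY_USEC)
estimated_bw = bandwidth_kib_s(REPORTED_IOPS, BLOCK_SIZE_KIB)

print(f"IOPS implied by latency: {estimated_iops:.0f} (fio reported {REPORTED_IOPS})")
print(f"Bandwidth implied by IOPS: {estimated_bw} KiB/s (fio reported {REPORTED_BW_KIB_S})")
```

The two estimates land within rounding of what fio printed, so the headline figures (about 1,075 IOPS, about 4.3 MiB/s, about 30 ms per write, `util=98.69%` on `sda`) all describe the same thing: the disk was kept busy for the whole test and this is the random-write rate it sustained.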