Operating system Ubuntu Linux 20.04.5

Webmin version 2.021

Virtualmin version 7.5-1

Hello, I have a server hosting 5 websites, and the VPS can't handle it anymore, so I'm trying to move to a VDS. I'm new to this; can anyone tell me how to do the migration (copy everything…)?

A VPS should handle 5 websites easily. I have 15 websites on a VPS.

Moving may be a question for your provider. Otherwise, use the Virtualmin backup and restore.

@stefan1959 I have an issue with bandwidth: one of the websites gets a lot of users…

And I did talk to them before, but they said they can't do anything (they don't offer any migration service)…

Also, does the backup and restore copy everything? And how long does it take? Or does it depend on my internet speed?

If everything is in Virtualmin hosting, yes. I do disaster restores to a clean OS every month or so, and it recovers the Virtualmin hosting without issue. The only thing you need to take note of is the Webmin global settings. Maybe look at getting a provider with better bandwidth allowances; aren't VDSes a lot more expensive?

@stefan1959 Yes, everything is on Virtualmin hosting, and true, a VDS is more expensive… I did check other hosts, but when you really think about it, you're paying extra either for more bandwidth or for more storage… I'm using Contabo, so I think a VDS is better than other hosts…

That's a good idea to use the Virtualmin backup and restore, but I don't know how to move it from server to server. Plus I have about 300 GiB in storage… and my internet is bad, not gonna lie. Do you think I can do it fast, or will it take a lot of time?
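A rough way to answer the "how long" question is plain arithmetic: data size divided by link speed. The sketch below assumes the backup is copied directly from the old server to the new one, in which case it is the servers' link that matters rather than a home connection. The 300 GiB figure comes from the post; the link speeds are illustrative assumptions, not any provider's actual numbers, and real transfers will be slower once disk speed, compression, and protocol overhead are counted.

```python
def transfer_hours(size_gib: float, link_mbit_per_s: float) -> float:
    """Best-case hours needed to move size_gib over a link of link_mbit_per_s."""
    size_bits = size_gib * 1024**3 * 8                    # GiB -> bytes -> bits
    seconds = size_bits / (link_mbit_per_s * 1_000_000)   # Mbit/s -> bit/s
    return seconds / 3600


if __name__ == "__main__":
    # 300 GiB is from the thread; the speeds below are assumed examples.
    for speed in (10, 100, 1000):
        print(f"{speed:>5} Mbit/s: {transfer_hours(300, speed):.1f} h")
```

Treat the result as a lower bound and use it mainly to decide whether the copy is a matter of hours or days.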

On Contabo's pricing, 400 GiB of storage on a VPS is $40 per month vs. 360 GiB of storage on a VDS at $103 per month. Big difference.

For 5 websites you would have to charge the customers a lot to make money. The hosting may be geared toward a lot of file downloads or something. It's not normal hosting, by the sounds of it.

Well, just create a new server, install Virtualmin, and restore. It's pretty easy.
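For anyone who prefers the command line to the Virtualmin UI, the backup and restore steps can be sketched as the two commands built below. This is a sketch under assumptions, not a tested migration recipe: `/backup/migration` is a hypothetical path, the flags are the ones I believe `virtualmin backup-domain` and `virtualmin restore-domain` accept (check the usage output on your own server before relying on them), and copying the backup directory from the old server to the new one is left out. The script only prints the commands; it does not run them.

```python
import shlex


def backup_command(dest_dir: str) -> list[str]:
    """Build a command that backs up every virtual server into dest_dir (old server)."""
    return [
        "virtualmin", "backup-domain",
        "--dest", dest_dir,
        "--all-domains",     # every virtual server
        "--all-features",    # web, mail, databases, DNS, ...
        "--all-virtualmin",  # Virtualmin's own settings as well (assumed flag)
        "--newformat",       # one backup file per domain inside dest_dir
    ]


def restore_command(source_dir: str) -> list[str]:
    """Build the matching restore command to run on the new server."""
    return [
        "virtualmin", "restore-domain",
        "--source", source_dir,
        "--all-domains",
        "--all-features",
        "--all-virtualmin",  # assumed flag, see lead-in
    ]


if __name__ == "__main__":
    # Hypothetical path; printed for review, not executed.
    print(shlex.join(backup_command("/backup/migration")))
    print(shlex.join(restore_command("/backup/migration")))
```

Doing this once against a throwaway server first is a cheap way to find out what the restore does not bring across.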

Best to do a trial run. That's why I carry out a disaster recovery every so often, so I know exactly what to do if it ever happens and it's not panic stations.

I back up to AWS, and it's easy to restore from their service.

[quote=“stefan1959, post:6, topic:123870”]

The hosting may be geared toward a lot of file downloads or something. It's not normal hosting, by the sounds of it.

[/quote]

@stefan1959 There are no downloads at all on the website. I think it's as I thought: someone is scraping my website or something…

I don't know what disaster recovery is.

And do you mean AWS S3?

5 websites → you begin by thinking that is too much (I doubt it)

Then you think that “web scrapers” are a problem (that is a different problem), which should be limited by simple code additions to your website. Web scrapers are an intermittent nuisance; they do not, or should not, download anything. They just view a site like a genuine visitor. That is probably limited to your landing page by user access (which you can manage).

Disaster recovery is something you plan for when the world stops rotating! Or there is a flood, earthquake, or power cut, or you as sysadmin get run over, fall sick, or become unable to keep your server operating.

I did think about it too at first, and used Cloudflare's “under attack mode”, BUT it's not working: I still get visitors, so I thought maybe they are just normal visitors, since they also come from Google!?

Let’s be clear and understand what web scraping really is.

It requires a website (some code hosted by a server). A web scraper that requests your index.html page is going to give up very fast if all it gets is a non-200 status response, a shallow plain landing page, or a login page for the user (to which it may attempt to initiate a second request, but that is a wholly different matter).

Web scrapers feed off of links. If your web page has a great deal of content (like a news site), there are other methods available to prevent or limit access (most of which should be managed by the application's code).

Now, I have my own opinions on Cloudflare, but I also think that it is for DDoS-type attacks rather than for ordinary requests. All requests that will end with a 200 response are genuine and should be dealt with securely by the site's application. After all, how can you tell the difference between a robot scraper and a genuine customer/visitor?

Yes, the website has a great deal of content, so I think I should hire a developer, since I don't know how to limit access…

I started getting a lot of “unassigned” visitors in Google Analytics, so I thought maybe it's a scraper. But when I read up on what “unassigned” means, I learned it may or may not be a scraper, so I don't know, to be honest.
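One way to get past guessing from analytics is to look at the web server's own access log and see whether a handful of clients account for most of the requests. The sketch below assumes the log is in the common "combined" format (IP, timestamp, request, status, size, referer, user agent); the log path is yours to supply, and lines that do not match are simply skipped. If the site sits behind Cloudflare's proxy, the logged IPs may be Cloudflare's rather than the visitors' unless the server is configured to restore the real address, so the user agent column may be the more useful one.

```python
import re
import sys
from collections import Counter

# Combined log format: ip ident user [time] "request" status size "referer" "agent"
LINE_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "[^"]*" (?P<status>\d{3}) \S+ '
    r'"[^"]*" "(?P<agent>[^"]*)"'
)


def top_clients(lines, n=10):
    """Return the n (ip, user_agent) pairs that made the most requests."""
    counts = Counter()
    for line in lines:
        match = LINE_RE.match(line)
        if match:  # lines not in combined format are ignored
            counts[(match["ip"], match["agent"])] += 1
    return counts.most_common(n)


if __name__ == "__main__":
    # Usage: python top_clients.py /path/to/access_log
    if len(sys.argv) > 1:
        with open(sys.argv[1], errors="replace") as log:
            for (ip, agent), hits in top_clients(log):
                print(f"{hits:>8}  {ip:<40}  {agent}")
```

A single address or user agent far ahead of the rest is a hint worth following up; an even spread suggests ordinary visitors.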

I think I should limit the access as you said and see how it goes…

You have not indicated what these websites are (for example, are they WP sites → ugh!) or how they were coded (PHP, Node.js → what framework). It could be as simple as putting a rate limiter in the middleware → that can have a dramatic effect on requests from bots. It is not an absolute fix, but it is so simple it can be worth it. It is a little less heavy-handed than blocking specific IPs.
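To make the rate-limiter idea concrete, here is a minimal sliding-window sketch. It is written in Python purely for illustration and is not tied to any framework mentioned in this thread; the class name, the limits, and the use of an IP address as the client key are all assumptions. Each client may make at most `max_requests` requests in any `window_seconds` span, and anything beyond that would typically be answered with HTTP 429.

```python
import time
from collections import defaultdict, deque


class SlidingWindowLimiter:
    """Allow at most max_requests per client within any window_seconds span."""

    def __init__(self, max_requests=60, window_seconds=60.0, clock=time.monotonic):
        self.max_requests = max_requests
        self.window_seconds = window_seconds
        self.clock = clock                # injectable so the limiter can be tested
        self._hits = defaultdict(deque)   # client id -> timestamps of recent requests

    def allow(self, client_id: str) -> bool:
        now = self.clock()
        hits = self._hits[client_id]
        # Forget requests that have aged out of the window.
        while hits and now - hits[0] >= self.window_seconds:
            hits.popleft()
        if len(hits) >= self.max_requests:
            return False                  # over the limit: reject this request
        hits.append(now)
        return True


if __name__ == "__main__":
    limiter = SlidingWindowLimiter(max_requests=3, window_seconds=1.0)
    # Hypothetical client key; a real middleware would use the request's address.
    print([limiter.allow("203.0.113.5") for _ in range(5)])
```

This keeps state in memory in one process, so a site running several worker processes would need a shared store instead; it is meant to show the idea, not to be dropped into production.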

Yes, it's a WP website, and I don't know if it's PHP or which framework it works with…

I mean, the scraper could be using proxies, so rate limiting is probably the best option, I guess.

And thanks for the ideas @Stegan and @stefan1959

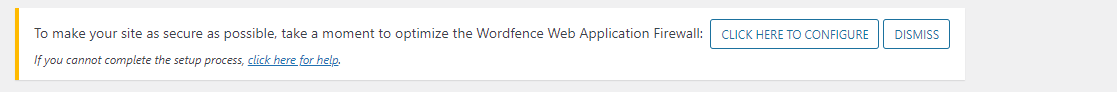

Have you installed Wordfence? The free version is fine.

@stefan1959 I did use it 2 years ago. I remember it was good until it made my website's admin login page stop working, so I deleted it…

Never had an issue. It does allow 2FA; maybe you turned that on.

No, I didn't. I just remember it was a conflict between plugins.

I will add it again right now and see if it does the job…

@stefan1959 I used it today and it's good. It also has the “limit access” option.

But don't use this.

I don't know how, but it will redirect your website's home page to a scam website full of ads…