Howdy all,

I don’t pay much attention to website analytics (probably to the detriment of our ability to make money), but I happened to notice that last month was (probably) our highest-traffic month ever on the forum, with 1.9 million pageviews, which is nice. It’s nice to know folks are still finding what we’re doing useful, and it’s a confirmation that moving to Discourse was a great choice.

I qualified the above statement with “probably” because there’s an outlier on this chart that I can’t explain. Actually, a couple of outliers I can’t explain. One is March of 2022, with over 2 million pageviews. I’m suspicious of that because it is surrounded by months with dramatically fewer views. I think that points to uncategorized crawlers (maybe “AI” crawlers, since some of them have been shown to disguise what they are), whereas this year we’ve seen steadily increasing visitors with nothing that looks like a crazy jump. The other strange jump is from May to June of 2021. I don’t think that one is mostly attributable to crawlers; more likely it was just a flurry of activity around new releases, but maybe it was the beginning of the “AI” feeding frenzy.

I suspect the increase in crawler activity (purple) comes down to there actually being more crawlers (because “AI”) and also more crawlers being properly detected that were missed before.

I don’t have a great feel for how much of this is actual humans, but we can see that posts and interactions have been steadily, if slowly, increasing ever since we launched the Discourse forum in 2020, though much more slowly than the visitor count. Since I’m utterly ruthless about deleting LLM-generated posts (when I recognize them) and blocking users who post LLM-generated text, I think we’re seeing mostly human interactions. If you’re a human, and you’ve posted, thanks! If you’re not human, I’m gonna block you.

One reason I find this encouraging is that most Stack Exchange sites have seen a dramatic decrease in usage since LLMs came along, and I find that worrying, for a few reasons. One reason I’m worried about it is that LLMs are wrong so much of the time. I know from talking to customers and users who’ve tried to get answers out of ChatGPT that it very often leads them down absolutely insane paths, getting them into a much deeper mess much faster than spending a few minutes reading the documentation or asking here would have. It sounds so confident, and is right some of the time, so it kind of overloads our brains into doing whatever it says. Like driving into a lake because the GPS told you to.

Anyway, the fact that we aren’t seeing a decline in real human users having actual human conversations (in fact, those conversations have been consistently increasing over time) is heartening. We’re not just talking to ourselves here; we’re helping a lot of people. I’ve seen studies indicating that orders of magnitude more people read forums than post to them, and these numbers bear that out. So, ask your questions, folks. It’ll probably help somebody else, maybe dozens or hundreds of somebodies. When you ask ChatGPT, it doesn’t help anybody else (and it might not help you, either, since you’re gonna have to fact-check it. You have to be cautious about stuff you get from forums, too, but at least in a forum, you’ll get some other opinions if someone is just plain wrong).

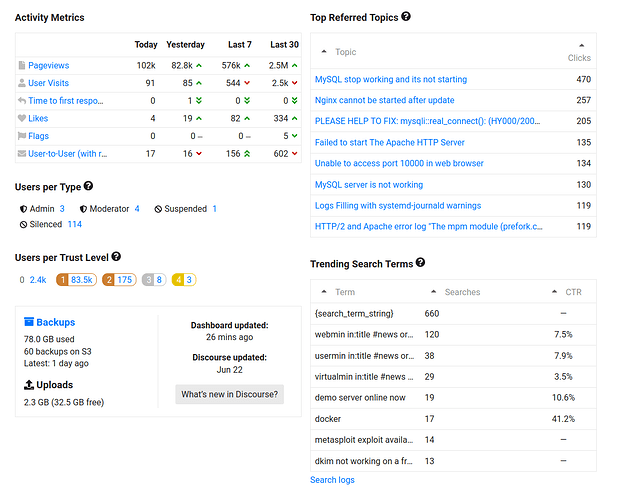

I don’t really have a point here. Just thought others might be interested in the state of the community. One more chart: